Training ChatGPT requires an understanding of its neural network architecture, a transformer trained with deep learning techniques. The model learns patterns, grammar, and semantic relationships from massive text datasets containing billions of words, and fine-tuning the pre-trained model on narrower data adds specialized knowledge. Linear algebra underlies the whole process: text is converted into numerical vectors that the network then transforms. The article also discusses adaptation strategies such as data-driven learning, argumentative writing, and concept mapping. Practical advice includes using diverse datasets, updating content regularly, employing human reviewers, and fostering collaboration among researchers. Staying informed about current research remains essential for continued progress in conversational AI.

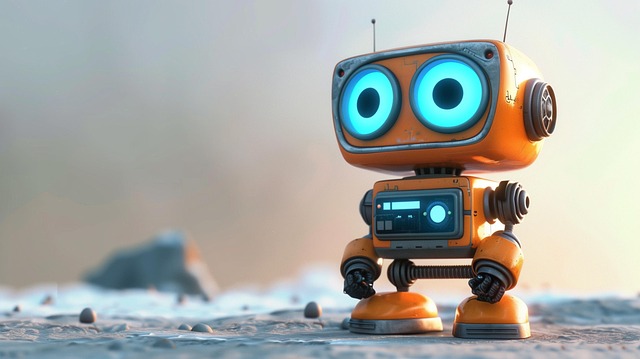

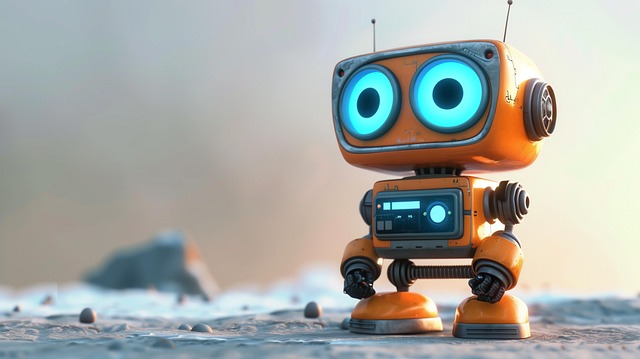

In today’s rapidly evolving digital landscape, ChatGPT-style models have captured the imagination of people worldwide. These AI assistants are changing the way we interact with technology. However, training them to deliver accurate and nuanced responses remains a complex challenge. This article walks through the process of training a ChatGPT-style model, covering best practices, ethical considerations, and current techniques so that you can make the most of these tools.

- Understanding ChatGPT Architecture: Laying the Foundation

- Data Collection and Preparation: Fueling the Model

- Training and Refinement: Unlocking ChatGPT's Potential

Understanding ChatGPT Architecture: Laying the Foundation

Training a ChatGPT model requires a solid understanding of its architecture, a stack of neural network layers designed to process and generate natural language. At its core, ChatGPT is built on the transformer architecture and trained with deep learning techniques to understand and produce human-like text. The first step is to turn language into mathematical representations: text is split into tokens, and each token is mapped to a vector (an embedding) in a high-dimensional space where the model can operate on it numerically.
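
To make the idea of tokens becoming vectors concrete, here is a minimal sketch of an embedding lookup. It assumes a tiny hypothetical vocabulary and uses a whitespace split in place of a real tokenizer; the embedding values are random placeholders, whereas a real model learns them during training.

```python
import random

# Hypothetical toy vocabulary; real models have tens of thousands of tokens.
VOCAB = ["<unk>", "the", "cat", "sat", "on", "mat"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def make_embedding_matrix(vocab_size, dim, seed=0):
    # One row (vector) per token ID. Random placeholders stand in for learned values.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]

def encode(text):
    # Whitespace split is a stand-in for a subword tokenizer; unknown words map to <unk>.
    return [TOKEN_TO_ID.get(w, TOKEN_TO_ID["<unk>"]) for w in text.lower().split()]

def embed(ids, matrix):
    # An embedding lookup is simply row selection from the matrix.
    return [matrix[i] for i in ids]

E = make_embedding_matrix(len(VOCAB), dim=8)
ids = encode("The cat sat on the mat")
vectors = embed(ids, E)
print(ids)
```

Both occurrences of "the" receive the same vector; it is the later transformer layers that make each token's representation depend on its context.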

The training process begins with pre-training on massive datasets, often containing billions of words drawn from sources such as books, articles, and web text. During this phase the model learns patterns, grammar, and semantic relationships by repeatedly predicting the next token in a sequence. Creative writing prompts are more useful later, when building examples that encourage varied and imaginative responses. The pre-trained model is then fine-tuned on data focused on specific tasks or domains, which lets it acquire specialized knowledge.
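
The pre-training objective described above is next-token prediction, scored with cross-entropy: the loss for each position is the negative log of the probability the model assigned to the token that actually came next. As a minimal sketch, the example below swaps in a bigram count model (with add-one smoothing) for the transformer. The model is deliberately simplistic, but the loss is computed the same way.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    # Count how often each token follows each preceding token.
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_prob(counts, vocab, prev, nxt):
    # Add-one (Laplace) smoothing so unseen pairs still get nonzero probability.
    row = counts[prev]
    return (row[nxt] + 1) / (sum(row.values()) + len(vocab))

def avg_next_token_loss(counts, vocab, tokens):
    # Mean cross-entropy of predicting each token from the one before it.
    losses = [-math.log(next_token_prob(counts, vocab, p, n))
              for p, n in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)

corpus = "the cat sat on the mat the cat ate".split()
vocab = set(corpus)
model = train_bigram(corpus)
loss = avg_next_token_loss(model, vocab, "the cat sat".split())
print(round(loss, 3))
```

Training a real model means adjusting its parameters to push this average loss down over billions of tokens.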

Understanding ChatGPT’s internal workings is crucial for optimizing its performance. Linear algebra is central here: vector and matrix operations transform text into numerical representations and carry those representations through every layer of the network. Developers who understand these operations are better placed to improve how the model tracks context, stays coherent, and produces accurate responses, and to contribute to the continued development of language models.
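
One of the most common vector operations on embeddings is cosine similarity, which measures how closely two vectors point in the same direction. The sketch below uses hand-picked, hypothetical 3-dimensional vectors. Real embeddings are learned and have hundreds or thousands of dimensions.

```python
import math

def dot(u, v):
    # Sum of element-wise products.
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    # Euclidean length of the vector.
    return math.sqrt(dot(u, u))

def cosine_similarity(u, v):
    # 1.0 = same direction, 0.0 = orthogonal, -1.0 = opposite direction.
    return dot(u, v) / (norm(u) * norm(v))

# Hypothetical vectors, chosen so related words point in similar directions.
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "apple": [0.1, 0.05, 0.95],
}
print(cosine_similarity(embeddings["king"], embeddings["queen"]))  # high
print(cosine_similarity(embeddings["king"], embeddings["apple"]))  # much lower
```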

Data Collection and Preparation: Fueling the Model

Data collection and preparation is the step that fuels the model with knowledge and context. It is pivotal because it largely determines the quality and versatility of the AI’s responses. When preparing training data, draw on diverse sources to broaden the model’s coverage. For instance, including texts from domains as varied as music theory, philosophical discussions of ethics, and argumentative essays helps the model handle a broader range of user queries.
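
One common way to combine diverse sources is to sample from them according to mixture weights, so that no single domain dominates training. The sketch below is a minimal illustration; the source names, placeholder documents, and weights are all hypothetical.

```python
import random
from collections import Counter

def mixture_sampler(sources, weights, seed=0):
    """Yield (source_name, document) pairs, picking the source by weight first.

    `sources` maps a source name to a list of documents; `weights` maps the
    same names to relative sampling weights.
    """
    rng = random.Random(seed)
    names = list(sources)
    w = [weights[n] for n in names]
    while True:
        name = rng.choices(names, weights=w, k=1)[0]
        yield name, rng.choice(sources[name])

# Hypothetical sources and weights, for illustration only.
sources = {
    "music_theory": ["music doc 1", "music doc 2"],
    "philosophy": ["philosophy doc 1", "philosophy doc 2"],
    "argumentative_essays": ["essay doc 1"],
}
weights = {"music_theory": 0.2, "philosophy": 0.3, "argumentative_essays": 0.5}

sampler = mixture_sampler(sources, weights, seed=42)
batch = [next(sampler) for _ in range(1000)]
counts = Counter(name for name, _ in batch)
print(counts)
```

Sampling by weight, rather than simply concatenating everything, lets you upweight small but valuable domains without duplicating files on disk.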

Data preparation demands meticulous attention. It involves cleaning and structuring the content so that it is consistent and relevant. Inconsistent or biased data can lead to inaccurate responses and reinforce existing societal biases, so diverse, high-quality datasets are essential for building robust models. For instance, when training a ChatGPT model on philosophical texts, a collection spanning many schools of thought exposes the model to a wide array of ideas and helps it engage in nuanced discussions of ethics.
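
A basic cleaning pass normalizes text, drops fragments too short to be useful, and removes exact duplicates. The sketch below covers only those consistency checks. Detecting bias or near-duplicates requires more involved tooling. The `min_words` threshold is an arbitrary assumption.

```python
import hashlib
import re
import unicodedata

def normalize(text):
    # Unicode NFKC normalization, then collapse whitespace runs and trim.
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()

def clean_corpus(docs, min_words=3):
    seen = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to carry useful signal
        # Hash a lowercased copy so case-only variants count as duplicates.
        digest = hashlib.sha256(norm.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(norm)
    return kept

raw = [
    "Kant argues  that duty\ngrounds morality.",
    "kant argues that duty grounds morality.",
    "ok",
    "Mill defends the principle of utility.",
]
print(clean_corpus(raw))
```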

Data must also be adapted to the model. Preprocessing techniques such as text normalization and entity recognition transform raw data into a format the learning algorithm can use, which helps the model extract meaningful patterns from the training material. Tokenization, which splits text into words or subword units, lets the model process complex linguistic structures efficiently, including words it has never seen as a whole. Combined, these preparation steps help a ChatGPT model converse on diverse topics and handle the nuances of human language.
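
To illustrate subword tokenization, here is a minimal sketch of greedy longest-match-first splitting in the style of WordPiece, using a tiny hypothetical vocabulary in which continuation pieces are prefixed with `##`. GPT-family models use a byte-level BPE tokenizer instead. This example only shows the general idea of breaking words into known pieces.

```python
def subword_tokenize(word, vocab, unk="[UNK]"):
    # Greedy longest-match-first: at each position take the longest piece in vocab.
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no known piece fits; give up on this word
        tokens.append(piece)
        start = end
    return tokens

def tokenize(text, vocab):
    # Minimal normalization (lowercase), then whitespace split, then subwords.
    out = []
    for word in text.lower().split():
        out.extend(subword_tokenize(word, vocab))
    return out

# Hypothetical toy vocabulary.
vocab = {"token", "##ization", "##ize", "split", "text", "##s"}
print(tokenize("Tokenization splits texts", vocab))
```

Because "token" and "##ize" are in the vocabulary, a word such as "tokenize" is still covered even though it never appears whole in the vocabulary.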

Training and Refinement: Unlocking ChatGPT's Potential

Training and refining a ChatGPT model demands strategic thinking and methodological precision. In essence, familiar teaching methods have to be adapted to the way AI systems learn. Unlike traditional learning, training ChatGPT is data-driven: the model learns nuanced language patterns and contextual understanding from large amounts of text. One strategy is to include examples of argumentative writing, which show the model how to construct coherent, persuasive responses that weigh several perspectives and follow a clear logical structure.
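
Argumentative examples can be packaged as fine-tuning data. The minimal sketch below builds records in which the assistant weighs several perspectives before concluding. The `messages` / `role` / `content` layout resembles the chat-style JSONL format that some fine-tuning services accept, but it is an assumption here; check your provider's documentation for the exact schema. The question and perspectives are hypothetical.

```python
import json

def make_example(question, perspectives, conclusion):
    # Assemble an answer that lists each perspective before stating a conclusion.
    body = "\n".join(f"- {name}: {point}" for name, point in perspectives)
    answer = f"Several perspectives apply:\n{body}\nOn balance: {conclusion}"
    return {
        "messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(examples):
    # One JSON object per line.
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)

example = make_example(
    "Should schools require uniforms?",
    [("Supporters", "Uniforms can reduce visible income differences."),
     ("Critics", "Uniforms can limit self-expression.")],
    "The answer depends on a school's goals and community.",
)
print(to_jsonl([example]))
```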

Concept mapping techniques can also help. Concept maps make knowledge structures explicit by showing how ideas relate to one another, which helps organize information. For instance, building concept maps for specific topics and turning their relationships into training material can help ChatGPT learn how ideas connect, so that its outputs are more relevant and contextually appropriate. The key lies in refining the training data so that it represents a diverse range of scenarios and language nuances, which improves both adaptability and accuracy.
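
A concept map can be stored as a list of (concept, relation, concept) triples, which is a simple directed graph. The sketch below, built on a hypothetical map, turns each edge into a plain sentence that could serve as training text and looks up directly related concepts. Converting maps to sentences this way is one possible approach, assumed here for illustration.

```python
from collections import defaultdict

# Hypothetical concept map: (source concept, relation, target concept).
CONCEPT_MAP = [
    ("tokenization", "produces", "tokens"),
    ("tokens", "are mapped to", "embeddings"),
    ("embeddings", "are processed by", "attention layers"),
]

def build_graph(triples):
    # Adjacency list: concept -> list of (relation, target concept).
    graph = defaultdict(list)
    for src, rel, dst in triples:
        graph[src].append((rel, dst))
    return graph

def to_statements(triples):
    # Turn each edge into a plain-English sentence.
    return [f"{src.capitalize()} {rel} {dst}." for src, rel, dst in triples]

def related(graph, concept):
    # Concepts reachable in one hop; .get avoids adding empty entries.
    return [dst for _, dst in graph.get(concept, [])]

graph = build_graph(CONCEPT_MAP)
for sentence in to_statements(CONCEPT_MAP):
    print(sentence)
print(related(graph, "tokens"))
```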

Vector representations of words and concepts are at the heart of this process. Every training step consists of linear algebra operations on these vectors, and the quality of the learned representations matters especially for tasks that require semantic understanding or mathematical reasoning. By comparing and combining vectors, for example through the transformer's attention mechanism, the model captures subtle meanings and relationships, which yields more precise and context-sensitive responses. Ultimately, continuous refinement of both training methods and training data is what moves ChatGPT toward better conversational experiences.
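
Attention is a clear example of vectors being compared and combined. Each query vector is scored against every key vector with a dot product, the scores are scaled by the square root of the vector dimension and turned into weights with softmax, and the output is the weighted average of the value vectors. This minimal sketch covers a single head with no learned projection matrices and no causal mask, all of which real transformers include.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matmul_transpose(A, B):
    # Computes A @ B^T where A and B are lists of row vectors.
    return [[sum(a * b for a, b in zip(row_a, row_b)) for row_b in B] for row_a in A]

def attention(Q, K, V):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one row at a time.
    d = len(K[0])
    scores = matmul_transpose(Q, K)
    out = []
    for row in scores:
        weights = softmax([s / math.sqrt(d) for s in row])
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

# Three hypothetical 2-d token vectors used as queries, keys, and values (self-attention).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(X, X, X))
```

Each output row mixes information from all positions, weighted by similarity, which is how a token's representation comes to reflect its context.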

In practice, use diverse training datasets, update content regularly to reflect current trends, and employ human reviewers for quality control. Collaboration among researchers, developers, and educators is essential for fine-tuning these models so that they keep pace with evolving language. As the field progresses, staying abreast of current research and techniques will be vital for keeping ChatGPT and similar models at the forefront of conversational AI.
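
Human review becomes more useful when ratings are aggregated consistently. The sketch below sorts examples into accepted, rejected, and flagged groups based on reviewer scores. The 1 to 5 scale, the acceptance threshold, the minimum number of reviews, and the disagreement cutoff are all hypothetical choices.

```python
from statistics import mean, pstdev

def review_filter(ratings, threshold=4.0, min_reviews=2, max_spread=1.0):
    """Split examples using human reviewer scores.

    ratings: example_id -> list of scores (assumed 1-5 scale).
    Returns (accepted, rejected, flagged) lists of example IDs.
    """
    accepted, rejected, flagged = [], [], []
    for ex_id, scores in ratings.items():
        if len(scores) < min_reviews:
            flagged.append(ex_id)   # not enough reviews yet
        elif pstdev(scores) > max_spread:
            flagged.append(ex_id)   # reviewers disagree; needs discussion
        elif mean(scores) >= threshold:
            accepted.append(ex_id)
        else:
            rejected.append(ex_id)
    return accepted, rejected, flagged

ratings = {"a": [5, 4, 5], "b": [2, 3, 2], "c": [5], "d": [1, 5]}
print(review_filter(ratings))
```

Flagging disagreement separately, instead of averaging it away, keeps ambiguous examples in front of reviewers rather than silently entering the training set.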

By understanding the core principles of ChatGPT’s architecture, collecting and preparing diverse datasets, and refining models through iterative training, practitioners can make the most of this technology. This article has covered each of these stages and offered practical insights along the way. Applying these strategies will help developers build more capable AI assistants for applications ranging from customer service to content generation. The key lies in persistent optimization and adaptation.